Host list (public IP / private IP)

Ansible 18.163.102.197/172.31.34.153

k8s-01 18.163.35.70/172.31.43.3

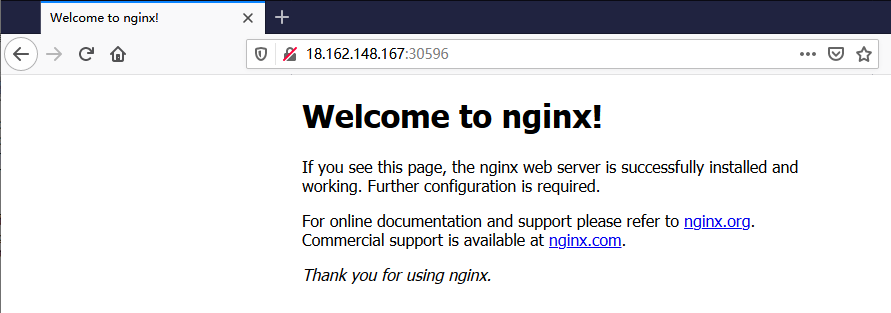

k8s-02 18.162.148.167/172.31.37.84

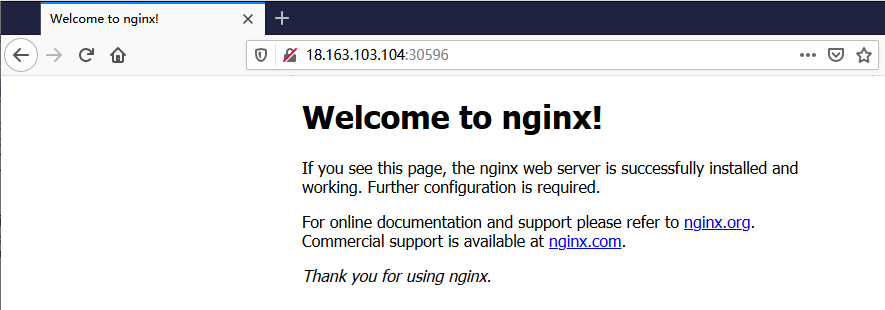

k8s-03 18.163.103.104/172.31.37.22

Working around Amazon EC2 hosts disabling root login and password authentication by default

sudo sed -i 's/^\#PermitRootLogin yes/PermitRootLogin yes/' /etc/ssh/sshd_config

sudo sed -i 's/^PasswordAuthentication no/PasswordAuthentication yes/' /etc/ssh/sshd_config

sudo systemctl restart sshd
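The two substitutions above only take effect if sshd_config contains the lines exactly as matched: a commented-out `#PermitRootLogin yes` and an active `PasswordAuthentication no`. A minimal sketch for previewing them on a scratch file before editing the real one; the sample lines are an assumption about a stock image, not a copy of one:

```shell
#!/bin/sh
# Preview the sshd_config substitutions on a scratch file (sample lines are assumed).
sample=$(mktemp)
cat > "$sample" <<'EOF'
#PermitRootLogin yes
PasswordAuthentication no
EOF

# Same substitutions as the commands above, written to stdout instead of in place.
preview=$(sed -e 's/^#PermitRootLogin yes/PermitRootLogin yes/' \
              -e 's/^PasswordAuthentication no/PasswordAuthentication yes/' \
              "$sample")
printf '%s\n' "$preview"
rm -f "$sample"
```

On the real host, `sudo sshd -t` checks the edited file for syntax errors before the restart.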

Check the Ansible version on the local host

[root@ip-172-31-34-153 ~]# ansible --version

ansible 2.9.5

config file = /etc/ansible/ansible.cfg

configured module search path = [u'/root/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']

ansible python module location = /usr/lib/python2.7/site-packages/ansible

executable location = /bin/ansible

python version = 2.7.5 (default, Oct 30 2018, 23:45:53) [GCC 4.8.5 20150623 (Red Hat 4.8.5-36)]

[root@ip-172-31-34-153 ~]#

Disable strict host key checking on the local host

[root@ip-172-31-34-153 ~]# vi /etc/ssh/ssh_config

StrictHostKeyChecking no
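Setting this in /etc/ssh/ssh_config applies to every outbound SSH connection from the control host. As a narrower alternative, the option could be scoped to the three cluster nodes only. The sketch below writes such a stanza to a scratch file (an assumption for illustration); in practice it would be appended to ~/.ssh/config:

```shell
#!/bin/sh
# Build a Host-scoped stanza for the three cluster nodes listed in this document.
nodes="18.163.35.70 18.162.148.167 18.163.103.104"
stanza_file=$(mktemp)   # scratch file; append to ~/.ssh/config for real use

{
    printf 'Host %s\n' "$nodes"
    printf '    StrictHostKeyChecking no\n'
} > "$stanza_file"

cat "$stanza_file"
```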

Generate a key pair and distribute the public key to the remote hosts

[root@ip-172-31-34-153 ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

Generating public/private rsa key pair.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:Gj5nl42xywRn0/s9hjBeACErGJWjQhfoDuEDT2yjYfE root@ip-172-31-34-153.ap-east-1.compute.internal

The key's randomart image is:

+---[RSA 2048]----+

| oooo... .. |

|++*.oo o. |

|*B.E.... . |

|o=.. . o |

|o o . S = o |

| . . o + X o |

| + o B * . |

| + + o o + |

| o o o|

+----[SHA256]-----+

[root@ip-172-31-34-153 ~]# cat .ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC29DSROHgwWlucHoL/B+S/4Rd1KsVEbYLmM4p0+Ptx4NjGooEhrnNjIhpKmPNI5zvGtganSia2A7Vsp5Y+IVOgThRjzptQQzmbEloIqv6SsJRDyrUQIPV9dv3jv5pvbtAN0D5rh1AATPh0FNBtnkvm6HLowjueKdE6pBiq74NTPc5jfDuvwq2S5s4Ztnw9NsTuIlIiC7STCfuDo7NoxRVl+QumD12tW52CPd4ZjA4vg4v7xr/BF/rRxdFuG6+740s2kO1EZNaUOoi99qMLQiScOK+SLw+/tN66EmZC0uMeYlDiZZ1VsLb2MMd11CJDWSZ9SZbd1dHQbXywUbj0tRQF root@ip-172-31-34-153.ap-east-1.compute.internal

[root@ip-172-31-34-153 ~]#

Distribute the public key

[root@ip-172-31-34-153 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@18.163.35.70

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@18.163.35.70's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@18.163.35.70'"

and check to make sure that only the key(s) you wanted were added.

[root@ip-172-31-34-153 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@18.162.148.167

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@18.162.148.167's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@18.162.148.167'"

and check to make sure that only the key(s) you wanted were added.

[root@ip-172-31-34-153 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@18.163.103.104

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@18.163.103.104's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@18.163.103.104'"

and check to make sure that only the key(s) you wanted were added.

[root@ip-172-31-34-153 ~]#
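The three ssh-copy-id invocations above differ only in the target address, so they can be sketched as a loop. `DRY_RUN` is an illustrative switch introduced here, not an ssh-copy-id option; with its default of 1 the commands are only printed:

```shell
#!/bin/sh
# Repeat ssh-copy-id for each node. DRY_RUN=1 (the default here, an illustrative
# switch) only prints the commands; set DRY_RUN=0 to actually run them.
DRY_RUN=${DRY_RUN:-1}
nodes="18.163.35.70 18.162.148.167 18.163.103.104"

distribute_key() {
    for node in $nodes; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@$node"
        else
            ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@$node"
        fi
    done
}

distribute_key
```

Each real run still prompts for the root password of the target node, as in the transcript above.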

Configure the Ansible inventory

[root@ip-172-31-34-153 ~]# mkdir kube-cluster

[root@ip-172-31-34-153 ~]# cd kube-cluster/

[root@ip-172-31-34-153 kube-cluster]# vi hosts

[masters]

master ansible_host=18.163.35.70 ansible_user=root

[workers]

worker1 ansible_host=18.162.148.167 ansible_user=root

worker2 ansible_host=18.163.103.104 ansible_user=root
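Each inventory line pairs an alias (master, worker1, worker2) with the address Ansible connects to. As a quick sanity check of that mapping, the sketch below writes the same inventory to a scratch directory and lists alias and address pairs; `list_hosts` is a hypothetical helper, not an Ansible command:

```shell
#!/bin/sh
# Write the inventory to a scratch directory and list alias -> address pairs.
workdir=$(mktemp -d)
cat > "$workdir/hosts" <<'EOF'
[masters]
master ansible_host=18.163.35.70 ansible_user=root

[workers]
worker1 ansible_host=18.162.148.167 ansible_user=root
worker2 ansible_host=18.163.103.104 ansible_user=root
EOF

# Skip group headers and blank lines; print the alias and its ansible_host value.
list_hosts() {
    awk '!/^\[/ && NF {
        for (i = 2; i <= NF; i++)
            if ($i ~ /^ansible_host=/) { sub(/^ansible_host=/, "", $i); print $1, $i }
    }' "$1"
}

list_hosts "$workdir/hosts"
```

With Ansible installed and the keys distributed, `ansible -i hosts all -m ping` confirms that every entry is reachable over SSH.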

Prepare the base-environment playbook (k8s-01/k8s-02/k8s-03)

[root@ip-172-31-34-153 kube-cluster]# vi kube-dependencies.yaml

- hosts: all
  become: yes
  tasks:
    - name: Install yum utils
      yum:
        name: yum-utils
        state: latest

    - name: Install device-mapper-persistent-data
      yum:
        name: device-mapper-persistent-data
        state: latest

    - name: Install lvm2
      yum:
        name: lvm2
        state: latest

    - name: Add Docker repo
      get_url:
        url: https://download.docker.com/linux/centos/docker-ce.repo
        dest: /etc/yum.repos.d/docker-ce.repo

    - name: install Docker
      yum:
        name: docker-ce
        state: latest
        update_cache: true

    - name: start Docker
      service:
        name: docker
        state: started
        enabled: yes

    - name: disable SELinux
      command: setenforce 0

    - name: disable SELinux on reboot
      selinux:
        state: disabled

    - name: ensure net.bridge.bridge-nf-call-ip6tables is set to 1
      sysctl:
        name: net.bridge.bridge-nf-call-ip6tables
        value: 1
        state: present

    - name: ensure net.bridge.bridge-nf-call-iptables is set to 1
      sysctl:
        name: net.bridge.bridge-nf-call-iptables
        value: 1
        state: present

    - name: add Kubernetes' YUM repository
      yum_repository:
        name: Kubernetes
        description: Kubernetes YUM repository
        baseurl: https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
        gpgkey: https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
        gpgcheck: yes

    - name: install kubelet
      yum:
        name: kubelet-1.17.3
        state: present
        update_cache: true

    - name: install kubeadm
      yum:
        name: kubeadm-1.17.3
        state: present

    - name: start kubelet
      service:
        name: kubelet
        enabled: yes
        state: started

- hosts: master
  become: yes
  tasks:
    - name: install kubectl
      yum:
        name: kubectl-1.17.3
        state: present
        allow_downgrade: yes

Run the playbook

[root@ip-172-31-34-153 kube-cluster]# ansible-playbook -i ./hosts kube-dependencies.yaml

PLAY [all] *****************************************************************************************************

TASK [Gathering Facts] *****************************************************************************************

ok: [worker1]

ok: [worker2]

ok: [master]

TASK [Install yum utils] ***************************************************************************************

changed: [worker1]

changed: [master]

changed: [worker2]

TASK [Install device-mapper-persistent-data] *******************************************************************

changed: [worker1]

changed: [worker2]

changed: [master]

TASK [Install lvm2] ********************************************************************************************

changed: [worker2]

changed: [worker1]

changed: [master]

TASK [Add Docker repo] *****************************************************************************************

changed: [worker2]

changed: [worker1]

changed: [master]

TASK [install Docker] ******************************************************************************************

changed: [worker1]

changed: [worker2]

changed: [master]

TASK [start Docker] ********************************************************************************************

changed: [worker1]

changed: [master]

changed: [worker2]

TASK [disable SELinux] *****************************************************************************************

changed: [worker2]

changed: [worker1]

changed: [master]

TASK [disable SELinux on reboot] *******************************************************************************

[WARNING]: SELinux state change will take effect next reboot

changed: [worker2]

changed: [worker1]

changed: [master]

TASK [ensure net.bridge.bridge-nf-call-ip6tables is set to 1] **************************************************

[WARNING]: The value 1 (type int) in a string field was converted to u'1' (type string). If this does not look

like what you expect, quote the entire value to ensure it does not change.

changed: [worker2]

changed: [worker1]

changed: [master]

TASK [ensure net.bridge.bridge-nf-call-iptables is set to 1] ***************************************************

changed: [worker1]

changed: [worker2]

changed: [master]

TASK [add Kubernetes' YUM repository] **************************************************************************

changed: [worker2]

changed: [worker1]

changed: [master]

TASK [install kubelet] *****************************************************************************************

changed: [worker1]

changed: [worker2]

changed: [master]

TASK [install kubeadm] *****************************************************************************************

changed: [worker1]

changed: [worker2]

changed: [master]

TASK [start kubelet] *******************************************************************************************

changed: [worker2]

changed: [worker1]

changed: [master]

PLAY [master] **************************************************************************************************

TASK [Gathering Facts] *****************************************************************************************

ok: [master]

TASK [install kubectl] *****************************************************************************************

ok: [master]

PLAY RECAP *****************************************************************************************************

master : ok=17 changed=14 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

worker1 : ok=15 changed=14 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

worker2 : ok=15 changed=14 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

[root@ip-172-31-34-153 kube-cluster]#
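A run like the one above touches every node, so it can help to catch YAML mistakes before any task executes. The sketch below wraps ansible-playbook with its `--syntax-check` option first. `check_then_run` and the `ANSIBLE_PLAYBOOK` override are hypothetical names introduced for illustration:

```shell
#!/bin/sh
# Syntax-check a playbook before running it.
# ANSIBLE_PLAYBOOK is a hypothetical override (not an Ansible setting) so the
# wrapper can be tried without Ansible installed, e.g. ANSIBLE_PLAYBOOK=echo.
check_then_run() {
    playbook=$1
    inventory=${2:-./hosts}
    runner=${ANSIBLE_PLAYBOOK:-ansible-playbook}

    # --syntax-check parses the playbook without executing any task.
    "$runner" -i "$inventory" --syntax-check "$playbook" || return 1
    "$runner" -i "$inventory" "$playbook"
}
```

Usage from the kube-cluster directory would be `check_then_run kube-dependencies.yaml`; the second argument defaults to `./hosts`, matching the command above.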

Prepare the master node playbook (k8s-01)

[root@ip-172-31-34-153 kube-cluster]# vi master.yaml

- hosts: master
  become: yes
  tasks:
    - name: initialize the cluster
      shell: kubeadm init --pod-network-cidr=10.244.0.0/16 >> cluster_initialized.txt
      args:
        chdir: $HOME
        creates: cluster_initialized.txt

    - name: create .kube directory
      become: yes
      become_user: centos
      file:
        path: $HOME/.kube
        state: directory
        mode: 0755

    - name: copy admin.conf to user's kube config
      copy:
        src: /etc/kubernetes/admin.conf
        dest: /home/centos/.kube/config
        remote_src: yes
        owner: centos

    - name: install Pod network
      become: yes
      become_user: centos
      shell: kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml >> pod_network_setup.txt
      args:
        chdir: $HOME
        creates: pod_network_setup.txt

Run the playbook

[root@ip-172-31-34-153 kube-cluster]# ansible-playbook -i ./hosts master.yaml

PLAY [master] **************************************************************************************************

TASK [Gathering Facts] *****************************************************************************************

ok: [master]

TASK [initialize the cluster] **********************************************************************************

ok: [master]

TASK [create .kube directory] **********************************************************************************

[WARNING]: Module remote_tmp /home/centos/.ansible/tmp did not exist and was created with a mode of 0700, this

may cause issues when running as another user. To avoid this, create the remote_tmp dir with the correct

permissions manually

changed: [master]

TASK [copy admin.conf to user's kube config] *******************************************************************

changed: [master]

TASK [install Pod network] *************************************************************************************

changed: [master]

PLAY RECAP *****************************************************************************************************

master : ok=5 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

[root@ip-172-31-34-153 kube-cluster]#
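In the run above, "initialize the cluster" reports ok rather than changed. That is consistent with the `creates: cluster_initialized.txt` guard: when the named file already exists, the shell module skips the command. A rough shell analogue of that behaviour follows; `run_once` is a hypothetical helper, not something Ansible provides:

```shell
#!/bin/sh
# Rough analogue of the shell module's `creates` guard.
run_once() {
    marker=$1
    shift
    if [ -e "$marker" ]; then
        echo "ok (skipped, $marker exists)"
        return 0
    fi
    # As in the playbook, output is appended to the marker file, so the file
    # is created even when the command itself fails.
    "$@" >> "$marker" && echo "changed"
}

demo_dir=$(mktemp -d)
first=$(run_once "$demo_dir/cluster_initialized.txt" echo "init output")
second=$(run_once "$demo_dir/cluster_initialized.txt" echo "init output")
printf '%s\n%s\n' "$first" "$second"
```

One consequence of redirecting into the marker file: if kubeadm init fails partway, cluster_initialized.txt likely still exists and a rerun would skip the task, so the file would need to be removed (and the node reset with `kubeadm reset`) before trying again.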

Verify the Kubernetes cluster and master node status as the unprivileged user centos

[centos@k8s-01 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-01 Ready master 155m v1.17.3

[centos@k8s-01 ~]$

Prepare the worker node playbook (k8s-02/k8s-03)

[root@ip-172-31-34-153 kube-cluster]# vi workers.yaml

- hosts: master
  become: yes
  gather_facts: false
  tasks:
    - name: get join command
      shell: kubeadm token create --print-join-command
      register: join_command_raw

    - name: set join command
      set_fact:
        join_command: "{{ join_command_raw.stdout_lines[0] }}"

- hosts: workers
  become: yes
  tasks:
    - name: join cluster
      shell: "{{ hostvars['master'].join_command }} --ignore-preflight-errors all >> node_joined.txt"
      args:
        chdir: $HOME
        creates: node_joined.txt

Run the playbook

[root@ip-172-31-34-153 kube-cluster]# ansible-playbook -i hosts workers.yaml

PLAY [master] **************************************************************************************************

TASK [get join command] ****************************************************************************************

changed: [master]

TASK [set join command] ****************************************************************************************

ok: [master]

PLAY [workers] *************************************************************************************************

TASK [Gathering Facts] *****************************************************************************************

ok: [worker2]

ok: [worker1]

TASK [join cluster] ********************************************************************************************

changed: [worker2]

changed: [worker1]

PLAY RECAP *****************************************************************************************************

master : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

worker1 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

worker2 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

[root@ip-172-31-34-153 kube-cluster]#

Verify the cluster status

[centos@k8s-01 ~]$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-01 Ready master 159m v1.17.3

k8s-02 Ready <none> 41s v1.17.3

k8s-03 Ready <none> 41s v1.17.3

[centos@k8s-01 ~]$
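Freshly joined workers can take a short while to reach Ready, so the check above may need repeating. A small sketch that filters `kubectl get nodes` output for nodes that are not Ready; `not_ready_nodes` is a hypothetical helper, and the sample input is copied from the output above:

```shell
#!/bin/sh
# Print the names of nodes whose STATUS column is not exactly "Ready".
# Reads `kubectl get nodes` output (with header) on stdin.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample input copied from the output above.
sample='NAME STATUS ROLES AGE VERSION
k8s-01 Ready master 159m v1.17.3
k8s-02 Ready <none> 41s v1.17.3
k8s-03 Ready <none> 41s v1.17.3'

pending=$(printf '%s\n' "$sample" | not_ready_nodes)
[ -z "$pending" ] && echo "all nodes Ready"
```

Against a live cluster the same filter would be used as `kubectl get nodes | not_ready_nodes`.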

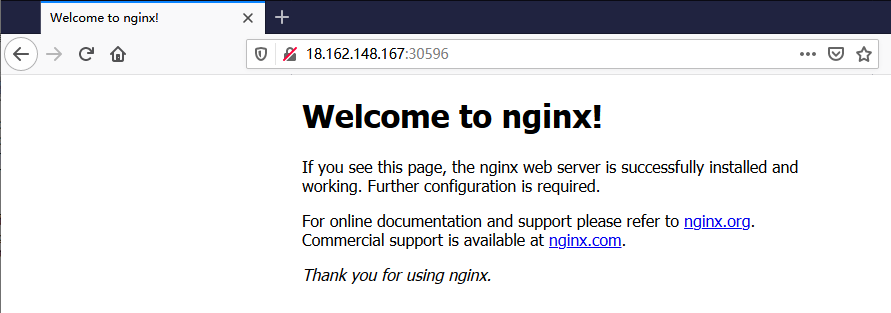

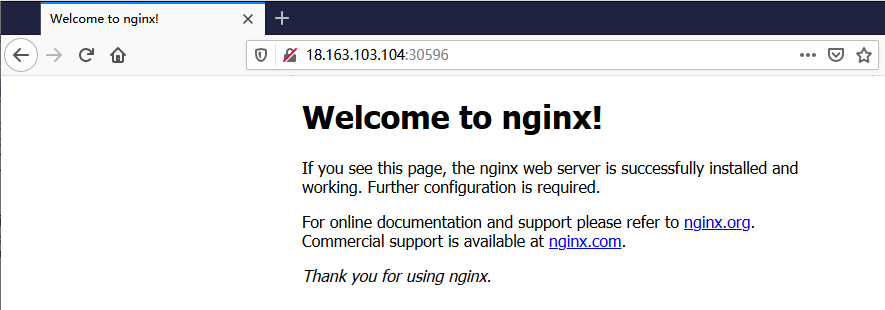

Deploy a containerized application as a test

[centos@k8s-01 ~]$ kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[centos@k8s-01 ~]$

[centos@k8s-01 ~]$ kubectl expose deploy nginx --port 80 --target-port 80 --type NodePort

service/nginx exposed

[centos@k8s-01 ~]$

[centos@k8s-01 ~]$ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 179m

nginx NodePort 10.109.120.31 <none> 80:30596/TCP 15s

[centos@k8s-01 ~]$
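The PORT(S) column `80:30596/TCP` means service port 80 is published on node port 30596. The sketch below extracts the node port from that value and builds a URL against the master's public address from the host list; `node_port_from` is a hypothetical helper:

```shell
#!/bin/sh
# Extract the node port from a PORT(S) value such as "80:30596/TCP".
node_port_from() {
    printf '%s\n' "$1" | sed -n 's|^[0-9]*:\([0-9]*\)/.*$|\1|p'
}

port=$(node_port_from "80:30596/TCP")
echo "http://18.163.35.70:${port}/"
```

On a live cluster the port can also be read directly with `kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'`. Reaching the URL from outside assumes the EC2 security group allows inbound traffic on that port.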

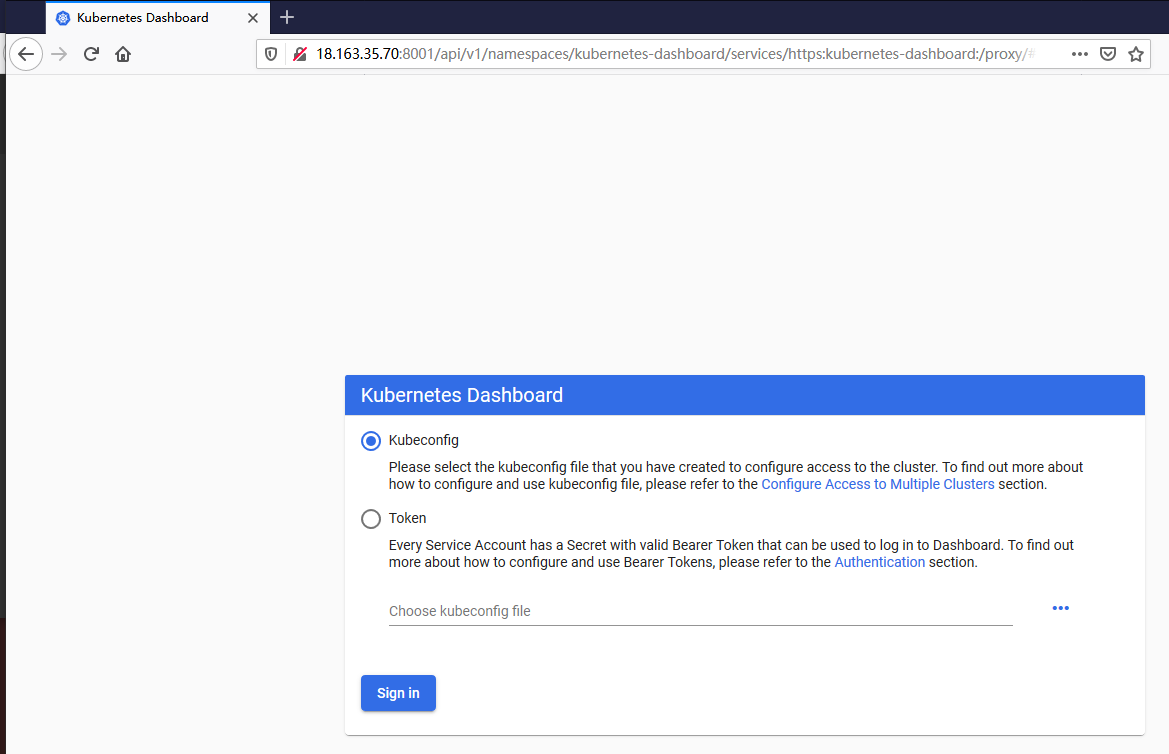

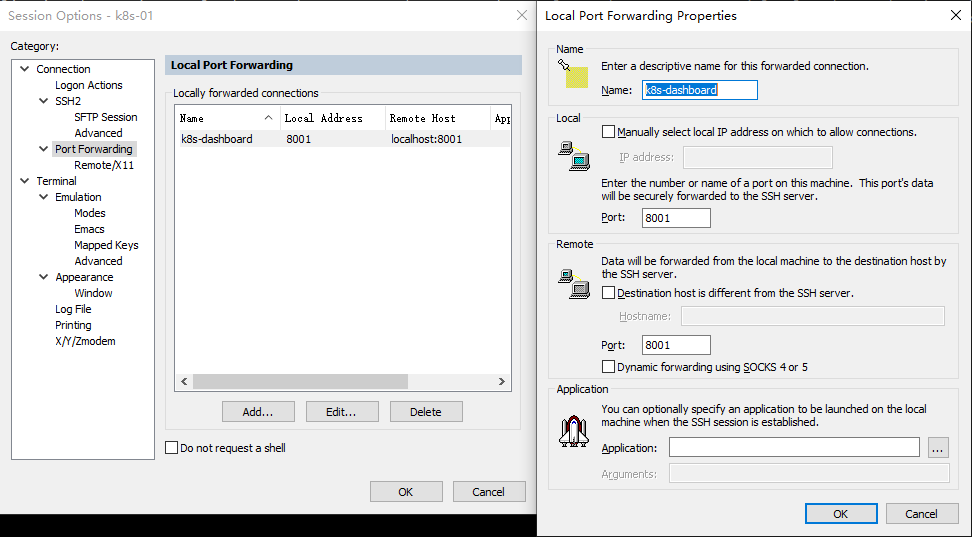

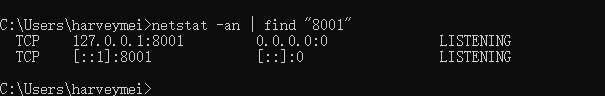

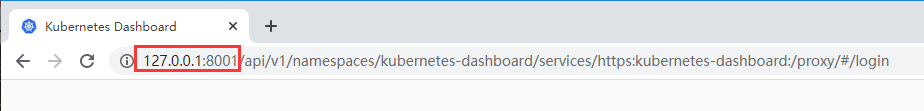

Access the service from a browser

Delete the deployed containerized application

[centos@k8s-01 ~]$ kubectl delete service nginx

service "nginx" deleted

[centos@k8s-01 ~]$ kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h4m

[centos@k8s-01 ~]$

[centos@k8s-01 ~]$ kubectl delete deployment nginx

deployment.apps "nginx" deleted

[centos@k8s-01 ~]$ kubectl get deployments

No resources found in default namespace.

[centos@k8s-01 ~]$

Details of the client command-line configuration file (PKI-based verification of server and client certificates)

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN5RENDQWJDZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJd01ETXdOekE1TVRNeU1Wb1hEVE13TURNd05UQTVNVE15TVZvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTHl4Ckx2M25ESzZHaDgxN1pjWmpqUVV5em13RlVvdzZhZDV1T1Jabzg2Q0tsNW52RnF3VjRYL2E4OGx2S1grc2xqWDkKSDZGR2Y2bm1uM2JMTnlXWWEreThGcllUMHBQR2x3aG5qWE1WSkJlUW9SS2NiK2hySERPZlNGZ0xsZjQ0TWR1VwpPd3Vmb2VTYnJpL3hoZ0ExMXhqbStmVGJNV3ZkNkZVM0h6ZW9WeEtsdVJNcmJVL0YySHFVN0R1ZEV6dUNQUWFsCk1OOUxiblZJcUtwREp5VzhmODY1V29MUHJlWjhMZkZqMVQvMXl2ZEk1dkJwTFBKc0NZUndLdndSTEhZajAzTHMKRVA5QlpuRkhNRDYwV3RuZXc4bkdaRjJkWTdIRHZRa1V2M2hoemtVMXRLa3BncWhvM2tCUytoUUNwUEpLMzZLMgplOG9aT2NrTDJsYjJzTmpBck84Q0F3RUFBYU1qTUNFd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFJTnFSMXYwUGVPKy9TR05OcXN6S2MzNHk5RGkKVjA5dFZoemRRNEV6aGtoM0ZOS0RRMDZ0VTNPcUw2dzREano2SnlwSW9IaGVsTXVxVmJtY0V5WE9WNzYwZ0hPRQpJaWJ0ZlJhcVdPMVc2RXE0NklQbjEwZkFWNzRwNjhVcWdQdjkra0RSb2grYWhobFJFTGJJdTJNcjAzNHBjcWduClZSK01lWGZ6Q0VvalF3dzd0ZVJGNnpMTCtQa0duNHI4L24rNFNGUjJIK2lDVCtiVzNxZWdCYi9MWWwyTmNMTHMKVDEvcnROZnFTaEIyV2dYbXZKUkl2YXRIWWtUdUZCN1IwZ0pkQUJJWXdkSGlpbVN4TkdDK05WRzIzL3BDdmRKUApFcjFPd2xuWFBMSStiOHpXNDNEanVjd0pPdTY0alZEVmduNUpJUDZqNjRuYnN2eC9VSkIvOUZNK0lVST0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://172.31.43.3:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM4akNDQWRxZ0F3SUJBZ0lJY0trbWVQMXNaZnd3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TURBek1EY3dPVEV6TWpGYUZ3MHlNVEF6TURjd09URXpNak5hTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQXhTbFZmb1IyVTQ2UGdCbzAKR2kyL2NROFEzVldCcVpaNzRwM3cxZ1pDS2dzaUhya3RGWTdrTm52Y3hLNXVPRjZIN1YxS0JrYmRUNXZvVlZ2YQpFRlY3TU5RZUZ6RDEzWkFKK2dOVFN5RFUrY21qT2xnQW1xMktZeHdKbTNBNUdnNFRSbVpUN01mS3FxMVc4V2lxClZlWkY1cnViUkdpb3Z0WWR5L3BHUEs1b0dJaWtpd2w0QU9SMXFGRG80ejR3SmtyMEd5OUxSSzhNZ0RkeEhrSk0KQklrZ2QrbnFpODBGZUpLM2JzWTBjUG9LYk9QbEx4Vm9XQW5iUWEyNjVqYXBQbitNdEpKWkdRelFwYXhranE5RApvek1Pa3pnV0dQMFZKcC9CUXFINGI5NTFXaUFpNTMwbVlvVTVRUDJwaFR6amtUbG1PQlErd3hoZDNKaU9TdjUwCkVmdkdHUUlEQVFBQm95Y3dKVEFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFIdSt5MjRxa2F0Y21rZkJYRUtrUXg1SUdvNm9Ud0FIcnRqdQo5SUw1MTZ4cVZPMlUvblAwM3hqbHBVdkFSR1dSU3czRjZsanNkUTM5VS9DMGQ2SVNGT0t4K2VkMFE5MVptYW03CnNib0liaXJSeDdVa3ErdThoS3dRK1Zad1Z0akdLUWYwclB2STFkb2drcHJldkF2Myt3OUdld3p5Y0NqemxIbE0KU09pdFdYYkdpdzBoWmk3a25lYmdMQVEvdkVVSlFrNFNVK21oMTJIaVNZY0R2WlJOZkJOUzNONnpPMnZXUGFrcwpFMVIvZ1BBTmlMMllTSXpnQVAwSyszTzJGVzc1SndLa3dXUlNEM1NIZWQxbTZIYlVGcTlBUEdWOXB1eHJTZXJoCkF0T2QzbTdIUnRCS3Q1L29ZaUNva1NBRjZIR1hJcCtEYTFBMFZQRkU0YlVkQjl5MUlHWT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBeFNsVmZvUjJVNDZQZ0JvMEdpMi9jUThRM1ZXQnFaWjc0cDN3MWdaQ0tnc2lIcmt0CkZZN2tObnZjeEs1dU9GNkg3VjFLQmtiZFQ1dm9WVnZhRUZWN01OUWVGekQxM1pBSitnTlRTeURVK2Ntak9sZ0EKbXEyS1l4d0ptM0E1R2c0VFJtWlQ3TWZLcXExVzhXaXFWZVpGNXJ1YlJHaW92dFlkeS9wR1BLNW9HSWlraXdsNApBT1IxcUZEbzR6NHdKa3IwR3k5TFJLOE1nRGR4SGtKTUJJa2dkK25xaTgwRmVKSzNic1kwY1BvS2JPUGxMeFZvCldBbmJRYTI2NWphcFBuK010SkpaR1F6UXBheGtqcTlEb3pNT2t6Z1dHUDBWSnAvQlFxSDRiOTUxV2lBaTUzMG0KWW9VNVFQMnBoVHpqa1RsbU9CUSt3eGhkM0ppT1N2NTBFZnZHR1FJREFRQUJBb0lCQVFDQ2s1bDNyU3JncyszKwpIVnljYWVmOGJNbnlqSXJQVWtiQ0UzQkpqdU9MRE15UUpIdmpaenRsaWlyd1o4Vy90M3Uyaks1VjhlRG90SXp1CjIySlVwd2hya2xCTGM3V2lBNTlYNFpQc2tkWDdpTHQrRElKNTdxMVVibUUrZk5pVWxQWFhEalpPL3hNT2JyYkMKTTF0OGdJR1RDblVPblhJRTBiSHlRZEw2cFZkenh3Ri9EeFNNTy9zOGxLOEh3K0RzT0xxU3FPbHoyOUpuYk9CeAp1aEMzK3VMalc4Rmpsblh6K25JQWRaWFZoRkp0dG43a1dkak1jZXkyTGZCc1NZbGZlWlhZaTRGTE8xbmNPWGpuCkYwLzNhU2g0UmtPeXZvZDZRSEVxTmFnS0ZPOUZqd29hQzRmWkxLQjBrTG16UlZYa1BiR2lDRXB1N1ozSEw0c3UKaFRaYTNUekJBb0dCQU8zMXlBWDVtYTR2U3FlK2V5eEx2L201WEhtb2QweDhXNUFkcU51ZzNuRjdUSE4zMXppbQpmYVBwTjd4R2lwcXNwMVlGQzBheC9FZDNJYW1RcWVSRlRtTHgrRmttb3NNSThBbUV2U0EvL0JTVWVhYTUzeWtwCkt1NXEzNFBWWW5OSXZpcWpTM1ZITERGckw5MlUzNnVBTk9uMTJwZUw3ek1kOXVOT0srNlV3L20xQW9HQkFOUWIKd0g0RWRUbVAwS2V5V0hmYlBheFhxSVJqV2xLeFhHTU5JcnRVZWNLQ0NadWFTNnE5TFYxWk5KZkMyamN3TFRKMApDMVB2RkNjWjAwRUFScGlkS2lYL0ZaQzloRHZ6TkpsUnRseGs0aGVZVUVoa0lQL1RtcVUvTWZhSEhBREhlbDNCCkNPL1BuUnU5Y3g0NmwxZjBOcm5XRVJoa2J5TTJ4Mzc1ek5xb0tJbFZBb0dBUzhxKy9QZzFOTCtuWGFwVC9SWGIKZmFUR2laRlkvaW1WMkY4NkMwby96NUZnRmw4VFU5M2pvck9EcHhvb3gzODZoVEZ5R0FCVXhFWnptRmlWWkRtVwo3L2oyQ3g4OU5EWENqcVdTdjVUaHE0Um5BdTJzNEtWV0lUNDFGdjUrTHczNlZBWlM0SFhjNDVpcVZEODR4cDA5ClBVK3JZaDJXQUlnSXZQbUhFS1NkandrQ2dZQm53dHU3eWZwK21qZjhrV1p0MjdhajVJM3ZsWnJOOFMyODF1UXkKdC9TSWpveWNyakp0NS9XVlFOcFZrMkNrdHRDbGFkZFF6QmdUdUxKN2plTDdMWWM4NXpocGdneDZOMU4zM1YxVQpmWldNN1ZuNHorTEV3NE5YYXo3SjF2Wi8reFdGWDdVN2UxamtCUjJYb0JvQlVOcWt0bS9PZXZOVFNxejFGTVorCkFOMHpzUUtCZ1FDaDROSlEvVjhhc3prOUR
nZ2F5bnZ1Z2JWWVg1R0lFNGRSRng3Z3dXek5BckI0V1pUODVHeDgKSzByN3BLdTJsYmh2OFE1UU9GdFFhS0JwcCtjb1g2a3cvbTJZdWdYeVdiREpScEY4ODJXbkQzYWhvbW10WTlXZgpOWmJkeGRXNk8xZ1dURTg1ODV3YW5uOWFZR3g5Q21xNDJ4Sk9SaURPakFZWWEyR3phTHI2SHc9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=
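The `*-data` fields in this file are base64-encoded PEM. A minimal sketch for pulling one field out and decoding it, assuming the value sits on the same line as its key; `kubeconfig_field` is a hypothetical helper and the demo file is a stand-in, not a real kubeconfig:

```shell
#!/bin/sh
# Pull one base64-encoded field out of a kubeconfig and decode it.
# Assumes the value sits on the same line as its key.
kubeconfig_field() {
    awk -v key="$2:" '$1 == key { print $2; exit }' "$1" | base64 -d
}

# Stand-in file for illustration only; the encoded value is the text "demo".
demo=$(mktemp)
printf 'certificate-authority-data: %s\n' "$(printf 'demo' | base64)" > "$demo"
decoded=$(kubeconfig_field "$demo" certificate-authority-data)
echo "$decoded"
```

Against the real file, `kubeconfig_field ~/.kube/config certificate-authority-data | openssl x509 -noout -subject -dates` would print the CA certificate's subject and validity period.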

Basic commands and options of the kubectl command-line tool

[centos@k8s-01 ~]$ kubectl

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):

create Create a resource from a file or from stdin.

expose Take a replication controller, service, deployment or pod and expose it as a new

Kubernetes Service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate):

explain Documentation of resources

get Display one or many resources

edit Edit a resource on the server

delete Delete resources by filenames, stdin, resources and names, or by resources and

label selector

Deploy Commands:

rollout Manage the rollout of a resource

scale Set a new size for a Deployment, ReplicaSet or Replication Controller

autoscale Auto-scale a Deployment, ReplicaSet, or ReplicationController

Cluster Management Commands:

certificate Modify certificate resources.

cluster-info Display cluster info

top Display Resource (CPU/Memory/Storage) usage.

cordon Mark node as unschedulable

uncordon Mark node as schedulable

drain Drain node in preparation for maintenance

taint Update the taints on one or more nodes

Troubleshooting and Debugging Commands:

describe Show details of a specific resource or group of resources

logs Print the logs for a container in a pod

attach Attach to a running container

exec Execute a command in a container

port-forward Forward one or more local ports to a pod

proxy Run a proxy to the Kubernetes API server

cp Copy files and directories to and from containers.

auth Inspect authorization

Advanced Commands:

diff Diff live version against would-be applied version

apply Apply a configuration to a resource by filename or stdin

patch Update field(s) of a resource using strategic merge patch

replace Replace a resource by filename or stdin

wait Experimental: Wait for a specific condition on one or many resources.

convert Convert config files between different API versions

kustomize Build a kustomization target from a directory or a remote url.

Settings Commands:

label Update the labels on a resource

annotate Update the annotations on a resource

completion Output shell completion code for the specified shell (bash or zsh)

Other Commands:

api-resources Print the supported API resources on the server

api-versions Print the supported API versions on the server, in the form of "group/version"

config Modify kubeconfig files

plugin Provides utilities for interacting with plugins.

version Print the client and server version information

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

[centos@k8s-01 ~]$

References

https://www.digitalocean.com/community/tutorials/how-to-create-a-kubernetes-cluster-using-kubeadm-on-centos-7